We tested every major AI mastering service so you don't have to. Here's what actually works, what's hype, and what'll make your tracks slap.

TL;DR

AI mastering has come a long way, but it's not magic. LANDR, eMastered, CloudBounce, and Dolby.io all have strengths, but knowing when to use them versus a human engineer is the real skill. We break down pricing, quality, and when each one makes sense.

Why AI Mastering Has Become a Serious Option

Five years ago, suggesting AI mastering to a serious producer would've got you laughed out of the studio. Fair enough — early versions were basically glorified limiters with a marketing budget. But the landscape has shifted dramatically.

Modern AI mastering tools use machine learning trained on tens of thousands of professionally mastered tracks. They analyse your mix's frequency balance, dynamics, stereo width, and loudness, then apply processing chains that a human engineer would need real studio time to build by hand. The results in 2025 are genuinely impressive for certain genres.

That said, let's be honest: AI mastering still isn't a replacement for a skilled human engineer working on your debut album. But for singles, demos, DJ edits, and content releases? It's become a legitimate part of the independent artist's toolkit. The question isn't whether to use it — it's when and how.

Head-to-Head: LANDR vs eMastered vs CloudBounce vs Dolby.io

We ran the same five tracks — a hip-hop beat, an indie rock mix, an electronic banger, a folk acoustic piece, and a pop vocal track — through every major service. Here's the honest breakdown.

LANDR remains the best known, and their 2025 update introduced genre-specific processing that actually works. Their hip-hop and electronic presets were the strongest in our test, adding warmth and punch without crushing dynamics. Pricing starts at about £4 per track or £8/month for unlimited.

eMastered gave us the most transparent results: tracks that sounded like better versions of themselves rather than something run through a machine. Their reference track feature is genuinely useful. Upload a commercially released track you want to match and their AI gets surprisingly close. At £9/month it's decent value.

CloudBounce felt the most conservative, which is either a pro or con depending on your perspective. Less likely to mess things up, but also less likely to wow you. Dolby.io's mastering API is the dark horse — technically focused, but producing consistently clean results.

When to Use AI Mastering (And When to Hire a Human)

Here's the rule we'd suggest: if the track matters to your career trajectory (your lead single, your EP, anything going to radio or sync libraries), invest in a human mastering engineer. You'll spend £50 to £150 per track with a good independent engineer, and the difference in nuance, stereo imaging, and dynamics is still noticeable.

For everything else — Spotify-only singles, SoundCloud releases, DJ edits, content for social media, demos you're sending to labels — AI mastering is perfectly fine. It'll get you 80% of the way there at 5% of the cost, and in a world where most people listen on earbuds and phone speakers, that last 20% often goes unnoticed.
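If you want to sanity-check that 5% figure, here's a rough sketch of the arithmetic using only the prices quoted in this piece: about £4 per track for pay-as-you-go AI mastering, and £50 to £150 for a human engineer. The names `AI_PER_TRACK`, `HUMAN_RANGE`, and `cost_share` are made up for illustration, and a subscription would change the numbers.

```python
# Prices are the ones quoted in this article, in GBP. They are not live quotes.
AI_PER_TRACK = 4.0             # pay-per-track AI mastering
HUMAN_RANGE = (50.0, 150.0)    # low and high end for an independent engineer


def cost_share(ai_price, human_price):
    """Return the AI price as a percentage of the human engineer's price."""
    return 100 * ai_price / human_price


for human_price in HUMAN_RANGE:
    share = cost_share(AI_PER_TRACK, human_price)
    print(f"£{human_price:.0f} engineer: AI mastering costs {share:.1f}% as much")
```

On those assumptions the AI route lands somewhere between roughly 3% and 8% of the human price, so 5% is a fair middle-of-the-road number rather than an exact one.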

The hybrid approach is increasingly common too. Some artists use AI mastering as a starting point, then make manual tweaks in a DAW. Others use it for A/B comparison — master with AI, then send to a human engineer and compare. It's a tool, not a replacement.

Pro Tips for Getting Better Results from AI Mastering

The single biggest factor in AI mastering quality isn't the tool — it's your mix. AI mastering can't fix a bad mix, and it'll amplify problems that a human engineer might catch and correct. Get your mix as good as possible before uploading.

Leave headroom. Seriously. If your mix is already slamming the limiter at -0.1 dBFS, no AI in the world can master it properly. Aim for peaks around -3 dBFS to -6 dBFS and you'll give the algorithm room to work. Also, export at the highest quality your session allows, with 24-bit WAV as the minimum.
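If you'd rather check headroom with numbers than by squinting at a meter, here's a minimal sketch. It assumes your audio is already loaded as a list of float samples where 1.0 is full scale, and it measures plain sample peak, not true peak. The function names `peak_dbfs` and `headroom_verdict` are our own, and the sine wave is just a stand-in for a real mix.

```python
import math


def peak_dbfs(samples):
    """Sample peak in dBFS, for float samples where 1.0 is full scale."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # digital silence has no finite level
    return 20 * math.log10(peak)


def headroom_verdict(samples, low=-6.0, high=-3.0):
    """Compare the peak with the -6 to -3 dBFS window suggested above."""
    level = peak_dbfs(samples)
    if level > high:
        return "too hot"
    if level < low:
        return "quieter than needed"
    return "ok"


# Stand-in for a real mix: one second of a 440 Hz sine at 0.6 of full scale.
mix = [0.6 * math.sin(2 * math.pi * 440 * n / 44100) for n in range(44100)]
print(f"{peak_dbfs(mix):.1f} dBFS -> {headroom_verdict(mix)}")
```

Your DAW's meter will tell you the same thing, but a script like this is handy if you're batch-checking a folder of exports before uploading.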

Use the reference track features when available. These tools are pattern-matching at their core, so giving them a target dramatically improves results. Pick a commercially released track in the same genre with a sound you like, and let the AI aim for that ballpark.

Finally, always A/B test. Listen to the mastered version against your mix at matched loudness levels. If the mastered version sounds worse in any way (harsher, less dynamic, weirdly compressed), try different settings or a different service. Don't just accept the output because a computer made it.
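Matching loudness matters because louder almost always sounds "better" on first listen. Here's a rough sketch of one way to do it: measure the RMS level of both versions and turn the master down by the difference. RMS is only a crude stand-in for perceived loudness (a proper comparison would use a LUFS meter), and the helper names `rms_dbfs`, `match_gain_db`, and `apply_gain` are hypothetical.

```python
import math


def rms_dbfs(samples):
    """RMS level in dBFS, for float samples where 1.0 is full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 10 * math.log10(mean_square)


def match_gain_db(mix, master):
    """Gain in dB to apply to the master so its RMS equals the mix's."""
    return rms_dbfs(mix) - rms_dbfs(master)


def apply_gain(samples, gain_db):
    """Scale samples by a gain expressed in dB."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


# Toy signals: the "master" is the same waveform as the mix, four times louder.
mix = [0.1, -0.1] * 100
master = [0.4, -0.4] * 100

gain = match_gain_db(mix, master)
level_matched_master = apply_gain(master, gain)
print(f"turn the master down by {abs(gain):.1f} dB before comparing")
```

Once the levels are matched, any difference you hear is down to the processing itself and not just the volume.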

The Future: Where AI Mastering Goes From Here

The trajectory is clear: AI mastering will keep getting better. We're already seeing tools that can handle stem mastering — processing individual elements of your mix separately before combining them — which was previously only possible with human engineers and expensive software.

Apple's Spatial Audio push means we'll likely see AI tools that can master for Dolby Atmos and spatial formats, opening up immersive audio to bedroom producers who can't afford a dedicated Atmos suite. That's genuinely exciting for independent artists.

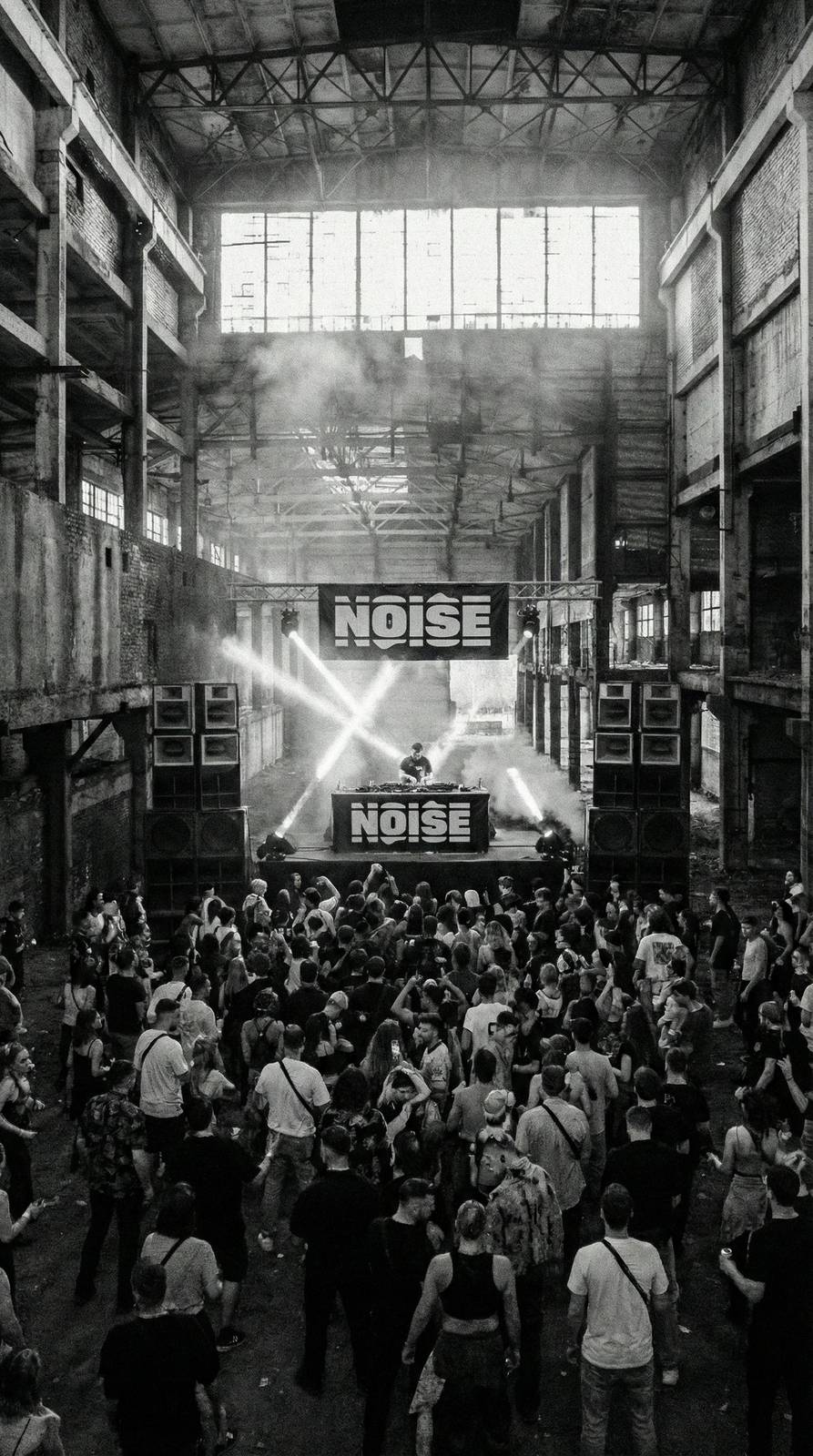

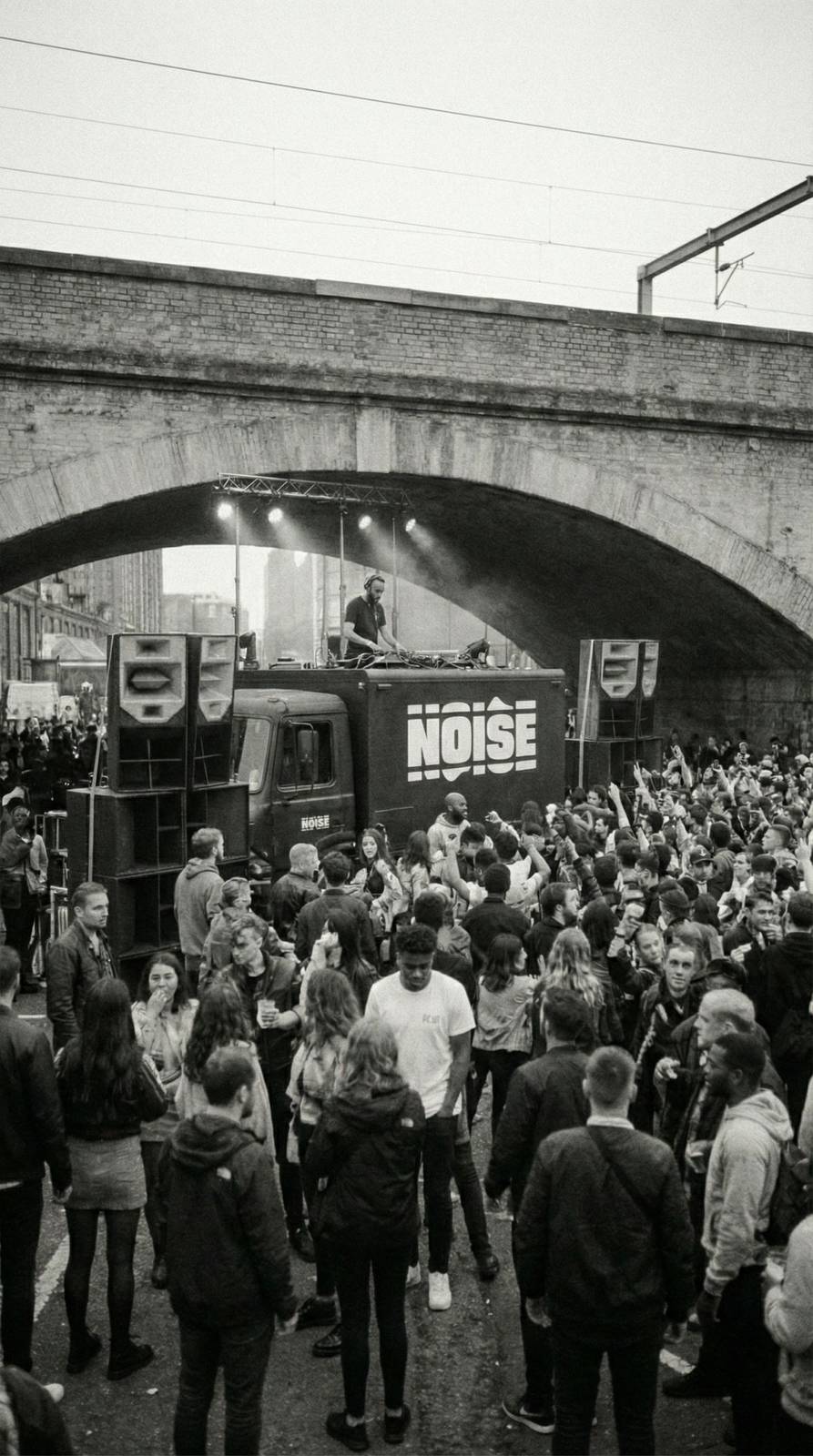

But here's the thing we care about most at Noise: accessibility. Every improvement in AI mastering is a step toward democratising music production. When a 17-year-old in their bedroom can get a professional-sounding master for a few quid, that's more music reaching more ears. And that's always worth championing.